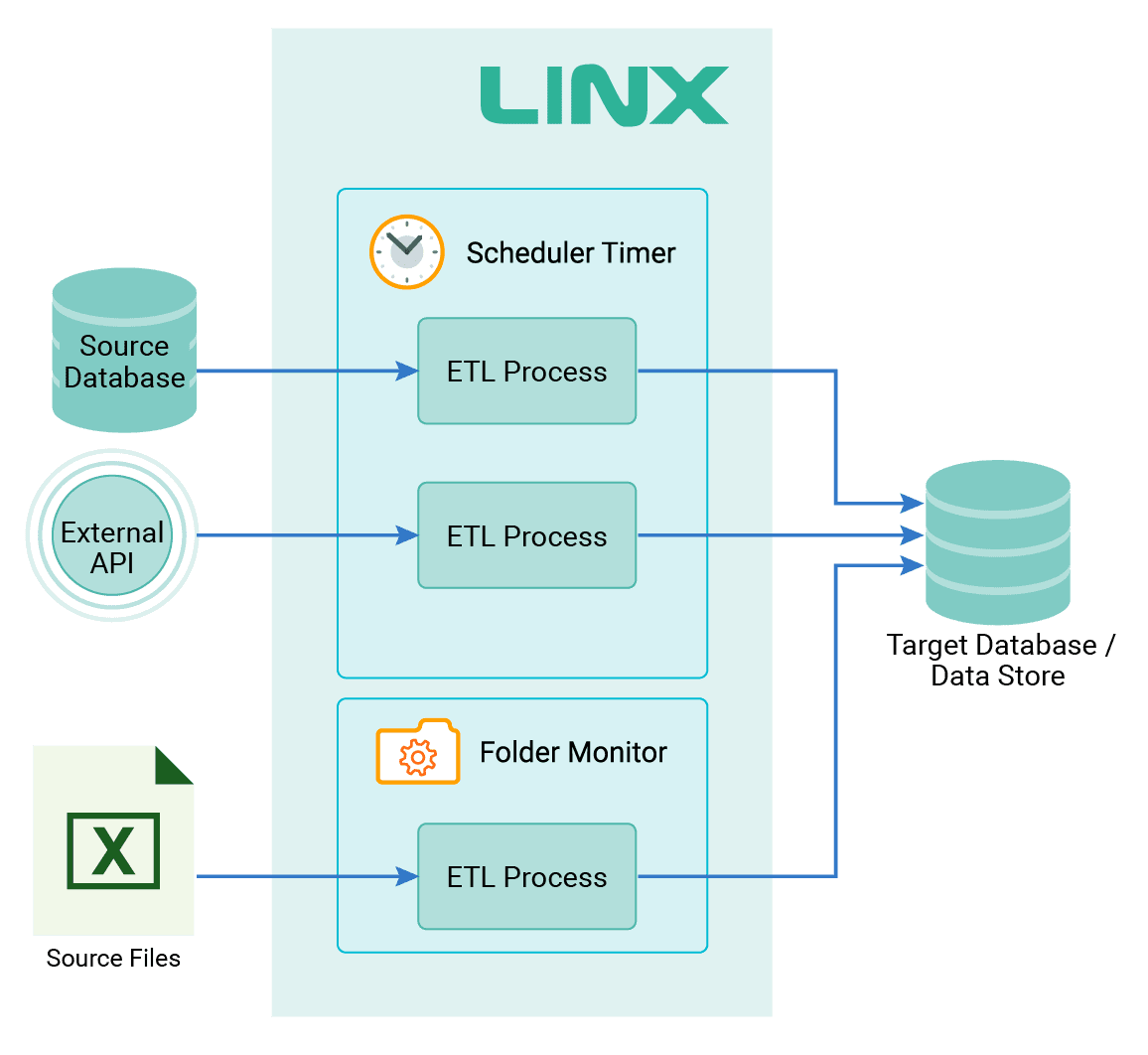

Extract, Transform, Load (ETL)

Linx offers comprehensive data mapping capabilities for building data pipelines with multifaceted transformations and automated control and scheduling of workflows. It lets you create simple or complex data connectors to many different data sources, including on-premises databases, SaaS applications, and file systems.

What you can do with Linx

- Build comprehensive, flexible, and customizable ETL processes.

- Aggregate, synchronize, and migrate data across systems and databases.

- Automate the created processes via triggers (directory changes, timers, and schedulers).

- Build fully re-runnable and idempotent processes and functions.
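
To illustrate what "re-runnable and idempotent" means for a load step, here is a minimal Python sketch using SQLite. It is not how Linx implements this; the `customers` table, its columns, and the `load_customers` function are hypothetical names chosen for the example. The idea is that loading the same batch twice leaves the target in the same state as loading it once.

```python
import sqlite3


def load_customers(conn: sqlite3.Connection, records: list) -> None:
    """Load (id, email) records so that repeating the load changes nothing."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, email TEXT)"
        )
        # INSERT OR REPLACE overwrites the row with the same primary key
        # instead of adding a duplicate, which makes the load idempotent.
        conn.executemany(
            "INSERT OR REPLACE INTO customers (id, email) VALUES (?, ?)", records
        )


conn = sqlite3.connect(":memory:")
batch = [(1, "a@example.com"), (2, "b@example.com")]
load_customers(conn, batch)
load_customers(conn, batch)  # re-running the same batch is safe
row_count = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
```

Keying the write on a stable identifier is one common way to get this property; a staging table followed by a merge is another.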

Common ETL solutions

- ETL processes and data pipelines.

- Data migration.

- Data distribution.

The developer experience

- Easily connect to database, file, or API data sources.

- Auto-generate SQL CRUD statements.

- Auto-generate CSV file imports.

- Powerful scheduling features.

- Comprehensive debugging and testing.

- Build API access to ETL processes.

Data loading on a schedule or event

Data can be loaded on a schedule or in response to an event, for example, when a file is dropped in a folder. Once triggered, the process reads the data, applies transformations, loads the data into the target data store, and archives the file. Additional logging can be built into the ETL process.
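
The read, transform, load, and archive flow described above can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not Linx's implementation: the `sales` table, the `name` and `amount` columns, and the function names `transform`, `process_file`, and `poll_inbox` are all hypothetical.

```python
import csv
import logging
import shutil
import sqlite3
from pathlib import Path

logger = logging.getLogger("etl_sketch")


def transform(row: dict) -> tuple:
    """Trim and title-case the name, and convert the amount to a float."""
    return (row["name"].strip().title(), float(row["amount"]))


def process_file(path: Path, conn: sqlite3.Connection, archive_dir: Path) -> int:
    """Read one CSV file, transform its rows, load them, then archive the file."""
    with path.open(newline="") as handle:
        rows = [transform(row) for row in csv.DictReader(handle)]

    with conn:  # commits on success, rolls back on error
        conn.execute("CREATE TABLE IF NOT EXISTS sales (name TEXT, amount REAL)")
        conn.executemany("INSERT INTO sales (name, amount) VALUES (?, ?)", rows)

    # Archive only after the load has been committed.
    archive_dir.mkdir(parents=True, exist_ok=True)
    shutil.move(str(path), str(archive_dir / path.name))
    logger.info("Loaded %d rows from %s", len(rows), path.name)
    return len(rows)


def poll_inbox(inbox: Path, conn: sqlite3.Connection, archive_dir: Path) -> int:
    """Process every CSV currently in the inbox and return the rows loaded."""
    return sum(process_file(p, conn, archive_dir) for p in sorted(inbox.glob("*.csv")))
```

Calling `poll_inbox` from a timer or scheduler approximates the scheduled case; a directory watcher calling `process_file` approximates the event-driven case.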

ETL processes can include:

- Interacting with files (CSV, flat text, Excel, PDF, JSON, XML).

- Interacting with databases (SQL Server, Oracle, ODBC, OLE DB and MongoDB).

- Calling REST, GraphQL and SOAP web services.

- Interacting with mailboxes to read emails and retrieve attachments.

- Handling compression (zip and unzip).

- Executing custom SQL and T-SQL queries and stored procedures.

- File and folder management.

- Formatting or transforming data using an expression editor.

- Using functions to manipulate data, strings and dates.

- Calculations and mathematical operations.

- Logic checks.

- Transferring data with message queues (RabbitMQ or Kafka).
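
Several of the steps listed above (unzipping, reading a CSV file, string and date functions, a calculation, and JSON output) can be combined in one short Python sketch. It only illustrates the kind of work such a process does and is not Linx's expression editor or API; the column names `customer`, `order_date`, `quantity`, and `unit_price`, the `%d/%m/%Y` input date format, and the function name `zipped_csv_to_json` are assumptions made for the example.

```python
import csv
import io
import json
import zipfile
from datetime import datetime


def zipped_csv_to_json(zip_bytes: bytes, member: str) -> str:
    """Unzip one CSV member, transform each row, and return the rows as JSON."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        text = archive.read(member).decode("utf-8")

    records = []
    for row in csv.DictReader(io.StringIO(text)):
        records.append({
            # String manipulation: trim whitespace and upper-case the name.
            "customer": row["customer"].strip().upper(),
            # Date manipulation: day/month/year in, ISO 8601 out.
            "order_date": datetime.strptime(row["order_date"], "%d/%m/%Y").date().isoformat(),
            # Calculation: line total from quantity and unit price.
            "total": round(float(row["quantity"]) * float(row["unit_price"]), 2),
        })
    return json.dumps(records)
```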